Beyond Redundancy: Why File Sharding Is a Security Feature, Not Just a Backup Strategy

Client-side encryption and file sharding spread data across clouds so that no single breach can expose a complete file. That is security beyond backups.

Backups are boring but essential. Every responsible business has some form of redundancy—copies of critical data stored in multiple locations. That protects you from hardware failure, accidental deletion, and the occasional disaster.

But redundancy doesn’t really change your security posture. Copies usually share the same credentials, access paths, and key management, so an attacker who gets into one copy can often get into all of them. You’ve multiplied availability, not reduced risk.

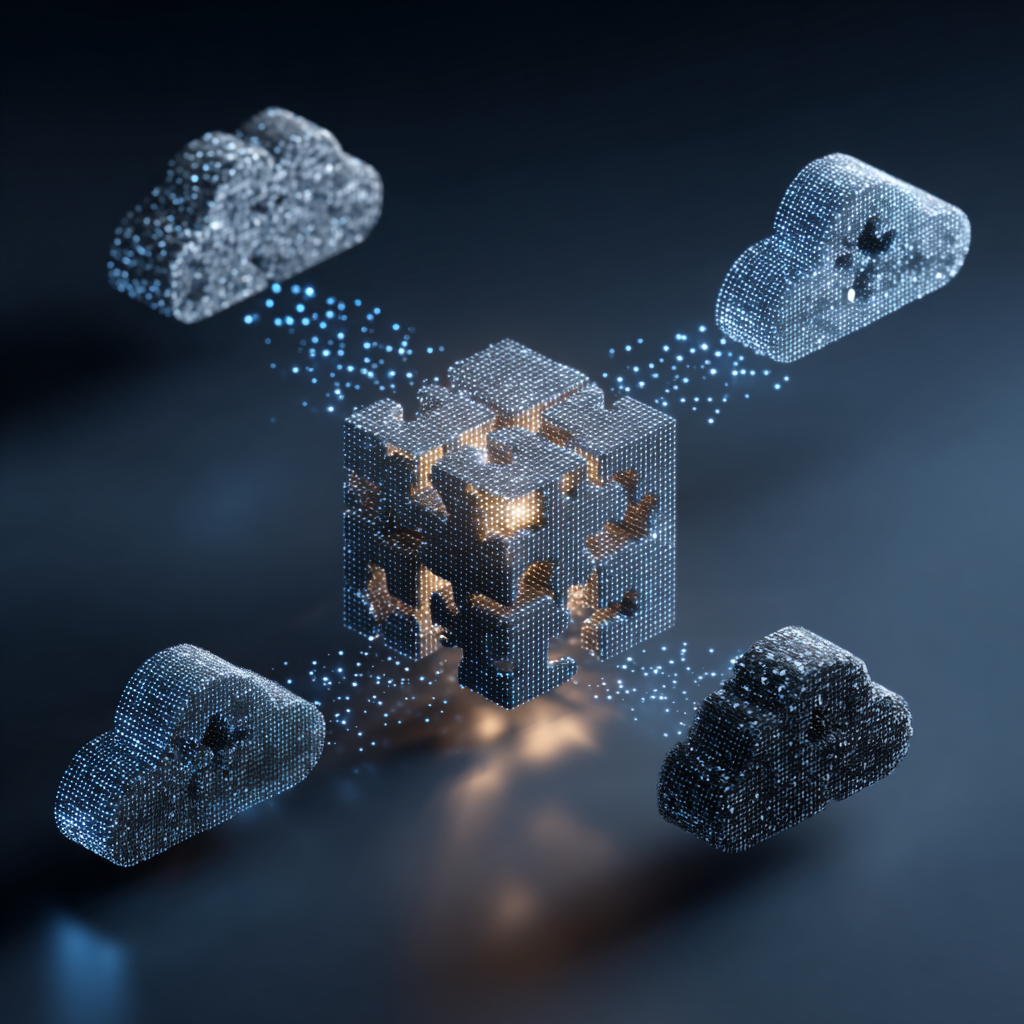

There is a different pattern that does change the risk equation: encrypting files on the client, then splitting that encrypted data into fragments and distributing it. This is not just “backup done better.” It’s a fundamentally different way of thinking about how your most valuable data should live in the world.

Redundancy vs. Encrypted Splitting: Two Different Worlds

Redundancy lives on a simple idea: take the same file, clone it, and store each clone in a different place. If one location fails, use another. Straightforward. Sensible. But from an attacker’s point of view, every extra copy is an extra target.

Encrypted file splitting takes a different path.

In a modern, privacy-first architecture, the sequence looks like this:

- The file is encrypted on the user’s device with a strong key that never leaves their control in plain form.

- The resulting ciphertext—the scrambled, unreadable blob—is then split into multiple cryptographic fragments.

- Those fragments are distributed across independent storage locations and providers.
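The three steps above can be sketched in Python using only the standard library. This is a minimal illustration under stated assumptions, not TransferChain’s implementation. The standard library has no AES, so the hypothetical `encrypt` below is a stand-in built from HMAC-SHA256 in counter mode with an encrypt-then-MAC tag. The hypothetical `split` simply cuts the ciphertext into equal slices. A real system would use a vetted AEAD such as AES-GCM and a proper erasure-coding or secret-sharing scheme.

```python
import hashlib
import hmac
import secrets

NONCE_LEN, TAG_LEN = 16, 32


def _subkey(key: bytes, label: bytes) -> bytes:
    """Derive an independent subkey (for encryption or MAC) from the master key."""
    return hmac.new(key, label, hashlib.sha256).digest()


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """HMAC-SHA256 used as a PRF in counter mode (stand-in for AES-CTR/GCM)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


def encrypt(key: bytes, plaintext: bytes) -> bytes:
    """Step 1: encrypt on the device. Output layout: nonce || ciphertext || tag."""
    nonce = secrets.token_bytes(NONCE_LEN)
    stream = _keystream(_subkey(key, b"enc"), nonce, len(plaintext))
    body = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(_subkey(key, b"mac"), nonce + body, hashlib.sha256).digest()
    return nonce + body + tag


def split(blob: bytes, n: int) -> list[bytes]:
    """Step 2: cut the ciphertext (never the plaintext) into n slices."""
    size = -(-len(blob) // n)  # ceiling division
    return [blob[i * size:(i + 1) * size] for i in range(n)]


key = secrets.token_bytes(32)  # generated and kept on the client
ciphertext = encrypt(key, b"Q3 board minutes: confidential")
fragments = split(ciphertext, 4)  # step 3 would upload each slice to a different provider
```

Every slice is a piece of an already-encrypted blob, and `key` never appears in anything that leaves the client.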

At no point does any cloud provider or storage node see the plaintext file. Crucially, no single location holds the full ciphertext either. Each place only ever sees a slice of scrambled data that means nothing on its own.

Possessing one fragment gives you nothing useful: no readable text, no usable preview, no structural hints beyond its size. Just encrypted noise.

Reassembling the file requires both a sufficient threshold of fragments and the correct decryption key held by the user or their organization. Remove either ingredient, and the attacker is left with useless bits.
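The “sufficient threshold” idea can be illustrated with the simplest possible erasure code: k data fragments plus one XOR parity fragment, so that any k of the k + 1 fragments rebuild the ciphertext. This scheme is an assumption chosen for clarity. The article does not say which scheme is used, and production systems typically rely on Reed-Solomon codes or secret sharing with larger margins. The original length must be stored somewhere (for example in the encrypted file map) to strip padding, and rebuilding only ever yields ciphertext: the key is still required to decrypt.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(blob: bytes, k: int) -> list[bytes]:
    """Return k equal-size data fragments followed by one XOR parity fragment."""
    size = -(-len(blob) // k)  # ceiling division
    padded = blob.ljust(size * k, b"\x00")
    data = [padded[i * size:(i + 1) * size] for i in range(k)]
    return data + [reduce(xor_bytes, data)]


def decode(available: dict[int, bytes], k: int, length: int) -> bytes:
    """Rebuild the blob from any k of the k + 1 fragments (indices 0..k, k = parity)."""
    if len(available) < k:
        raise ValueError(f"need {k} fragments, got {len(available)}")
    missing = [i for i in range(k) if i not in available]
    if missing:
        # With k of k + 1 present, at most one data fragment is absent, and it
        # equals the XOR of every fragment we do have (parity included).
        available = {**available, missing[0]: reduce(xor_bytes, available.values())}
    return b"".join(available[i] for i in range(k))[:length]


blob = bytes(range(50))  # stands in for the ciphertext
frags = encode(blob, k=3)
survivors = {i: f for i, f in enumerate(frags) if i != 1}  # fragment 1's provider is offline
rebuilt = decode(survivors, k=3, length=len(blob))
```

A single fragment fails the threshold check outright, and even a full threshold of fragments reproduces only ciphertext.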

Why Centralized Storage Becomes a Honeypot

Traditional cloud storage concentrates value. You send a complete file to a single provider. They might replicate it internally or mirror it across regions, but in essence the full ciphertext, and often the decryption keys, sit together in one or more locations.

For an attacker, that is appealing economics. Compromise one account, one admin, or one misconfigured bucket, and you might walk away with millions of documents. Even if everything is “encrypted at rest,” many platforms still control the keys, so encryption becomes a speed bump rather than a wall.

The result is a classic honeypot: huge payoff, focused target.

Client-side encryption followed by cryptographic splitting breaks that incentive. Breaching one storage node yields only a slice of ciphertext. Even if that node belongs to a major hyperscaler, the attacker cannot reconstruct the original file from that slice alone, and they don’t have the key. The expected return on compromise falls dramatically.

Multi-Cloud Distribution as a Security Control

Extend this model across multiple infrastructure providers, and the effect compounds.

One fragment might live on AWS, another on Azure, a third on Google Cloud, a fourth on another independent platform. Each provider sees only its own fragment: a segment of already-encrypted data with no context.

Even if a nation-state actor compromises an entire provider, they still don’t get anything they can use without:

- The other fragments from the other providers, and

- The decryption key, which remains with the user or organization.

Suddenly, “the provider was breached” stops being synonymous with “our data is gone.” You’ve structurally separated infrastructure risk from data risk.
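That separation can be made checkable with a placement rule. The sketch below uses a hypothetical `place` function, illustrative provider labels, and the assumed k-of-n threshold model. It assigns fragments round-robin and rejects any layout where one provider holds enough fragments to rebuild the ciphertext on its own, or where losing a single provider would leave too few fragments to recover the file.

```python
from collections import Counter


def place(num_fragments: int, providers: list[str], threshold: int) -> dict[int, str]:
    """Assign fragment indices to providers round-robin and sanity-check the layout."""
    placement = {i: providers[i % len(providers)] for i in range(num_fragments)}
    worst = max(Counter(placement.values()).values())  # most fragments on any one provider
    if worst >= threshold:
        raise ValueError("a single provider would hold enough fragments to rebuild the ciphertext")
    if num_fragments - worst < threshold:
        raise ValueError("losing one provider would leave too few fragments to recover the file")
    return placement


# Labels are illustrative; 4 fragments with threshold 3 models a 3-of-4 scheme.
layout = place(4, ["aws", "azure", "gcp", "independent"], threshold=3)
```

With four providers each holding one fragment, no single breach reaches the threshold, and any single outage still leaves three fragments.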

From a CIO or CISO perspective, that also plays nicely with multi-cloud strategies you may already be pursuing for resilience and cost reasons. Only here, distribution is not just about uptime. It’s a security control in its own right.

How the System Knows How to Rebuild the File

Of course, once you split and scatter encrypted fragments, you need a reliable way to pull them back together—only for the right party, at the right time.

That is where a dedicated control layer, often driven by a blockchain or similar tamper-evident registry, comes in:

- It maintains an encrypted map of which fragments belong to which file.

- It records which storage locations hold which fragments.

- It logs who is allowed to trigger reconstruction and under what conditions.
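The tamper-evident property can be illustrated without a full blockchain: a single-writer hash chain in which every entry commits to the hash of the one before it. This is a simplified assumption (no consensus, no replication), and the event fields are hypothetical. Entries reference opaque identifiers rather than file names.

```python
import hashlib
import json

GENESIS = "0" * 64


def _digest(prev: str, event: dict) -> str:
    body = json.dumps({"prev": prev, "event": event}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


def append(log: list[dict], event: dict) -> None:
    """Add an event whose hash covers both its content and the previous entry's hash."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev, "event": event, "hash": _digest(prev, event)})


def verify(log: list[dict]) -> bool:
    """Editing an entry invalidates its hash; recomputing that hash breaks the next link."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True


log: list[dict] = []
append(log, {"action": "store", "manifest_id": "m-7f3a"})
append(log, {"action": "grant", "subject": "user-42", "right": "reconstruct"})
intact = verify(log)
log[1]["event"]["right"] = "reconstruct-any"  # an insider quietly widens a grant
tampered = verify(log)
```

Detection assumes a trusted copy of the latest hash exists somewhere else; an attacker who controls the only copy could rewrite the whole chain. Replicating the chain across independent parties is what a blockchain adds.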

The critical point: this map is itself encrypted and bound to the user’s key material. So even if someone can read the registry, they cannot interpret it without the right cryptographic keys. They may see that “something” is stored somewhere, but not what, not how to assemble it, and not how to decrypt it.
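Sealing the file map can be sketched with the same kind of standard-library stand-in cipher (an HMAC-SHA256 keystream plus an encrypt-then-MAC tag); a real deployment would use a vetted AEAD. The `seal`/`unseal` names and the manifest fields are hypothetical. Only a holder of the key can read the map or detect that it was altered.

```python
import hashlib
import hmac
import json
import secrets


def _prf(key: bytes, msg: bytes) -> bytes:
    return hmac.new(key, msg, hashlib.sha256).digest()


def _xor_stream(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """XOR data with an HMAC-SHA256 counter-mode keystream (same call encrypts and decrypts)."""
    stream = b"".join(_prf(key, nonce + i.to_bytes(8, "big")) for i in range(len(data) // 32 + 1))
    return bytes(d ^ s for d, s in zip(data, stream))


def seal(key: bytes, manifest: dict) -> bytes:
    nonce = secrets.token_bytes(16)
    body = _xor_stream(_prf(key, b"enc"), nonce, json.dumps(manifest).encode())
    return nonce + body + _prf(_prf(key, b"mac"), nonce + body)


def unseal(key: bytes, sealed: bytes) -> dict:
    nonce, body, tag = sealed[:16], sealed[16:-32], sealed[-32:]
    if not hmac.compare_digest(tag, _prf(_prf(key, b"mac"), nonce + body)):
        raise ValueError("wrong key or tampered manifest")
    return json.loads(_xor_stream(_prf(key, b"enc"), nonce, body))


key = secrets.token_bytes(32)
manifest = {
    "length": 78,
    "threshold": 3,
    "fragments": [{"index": 0, "provider": "aws", "object": "f0a1"},
                  {"index": 1, "provider": "azure", "object": "c9d2"},
                  {"index": 2, "provider": "gcp", "object": "7b3e"},
                  {"index": 3, "provider": "independent", "object": "e51f"}],
}
sealed = seal(key, manifest)  # this opaque blob is what the registry would store
```

Anyone reading the registry sees only `sealed`: they can tell that something is stored, but not where its fragments live or how to assemble them.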

When an authorized user requests a file, their client:

- Authenticates and proves it holds the right key.

- Fetches the necessary fragments from multiple providers in parallel.

- Reassembles the ciphertext locally.

- Decrypts it back into the original file—again, on the client side.
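The first step, proving possession of the key without revealing it, can be sketched as an HMAC challenge-response. This is an assumption for illustration: real systems more often use public-key signatures, which the Python standard library does not provide, and the `Verifier`, `derive`, and `respond` names are hypothetical. The client derives a separate authentication subkey from its master key, so the server can check responses while holding nothing that decrypts data.

```python
import hashlib
import hmac
import secrets


def derive(master: bytes, label: bytes) -> bytes:
    """One-way subkey derivation: the auth subkey reveals nothing about other subkeys."""
    return hmac.new(master, label, hashlib.sha256).digest()


class Verifier:
    """Hypothetical server side: stores only the auth subkey, never the master key."""

    def __init__(self, auth_key: bytes) -> None:
        self._auth_key = auth_key
        self._pending: set[bytes] = set()

    def challenge(self) -> bytes:
        nonce = secrets.token_bytes(32)
        self._pending.add(nonce)
        return nonce

    def check(self, nonce: bytes, response: bytes) -> bool:
        if nonce not in self._pending:
            return False  # unknown or already-used challenge: blocks replays
        self._pending.discard(nonce)
        expected = hmac.new(self._auth_key, nonce, hashlib.sha256).digest()
        return hmac.compare_digest(expected, response)


def respond(master: bytes, nonce: bytes) -> bytes:
    """Client side: prove possession of the master key without sending it."""
    return hmac.new(derive(master, b"auth"), nonce, hashlib.sha256).digest()


master = secrets.token_bytes(32)  # stays on the client
verifier = Verifier(derive(master, b"auth"))
nonce = verifier.challenge()
first = verifier.check(nonce, respond(master, nonce))
replayed = verifier.check(nonce, respond(master, nonce))
```

Each challenge is single-use, so a captured response cannot be replayed later.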

The storage providers remain passive participants. They serve opaque fragments; they never see content.

What About Performance?

On paper, this can sound like a lot of machinery. In practice, a well-engineered system hides that complexity.

Fragments are fetched concurrently. When the system stores more fragments than it needs to rebuild a file, latency from one slow provider is masked by faster responses from others. Bandwidth is effectively pooled across multiple networks. From the user’s point of view, they drag and drop a file, and it uploads. They click to download, and it opens. The experience feels like any modern cloud tool, and is sometimes faster.
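Masking a slow provider relies on storing more fragments than the reconstruction threshold, as in a k-of-(k+1) layout. Under that assumption, a client can request every fragment at once and stop as soon as any k arrive. The fetchers below are simulated with hypothetical delays; a real client would call each provider’s storage API instead, and `fetch_first_k` is an illustrative name.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable


def fetch_first_k(fetchers: dict[int, Callable[[], bytes]], k: int) -> dict[int, bytes]:
    """Request all fragments in parallel and return as soon as any k have arrived."""
    got: dict[int, bytes] = {}
    pool = ThreadPoolExecutor(max_workers=len(fetchers))
    futures = {pool.submit(fn): idx for idx, fn in fetchers.items()}
    try:
        for fut in as_completed(futures):
            try:
                got[futures[fut]] = fut.result()
            except Exception:
                continue  # a failed provider just counts as a missing fragment
            if len(got) == k:
                return got
        raise RuntimeError(f"only {len(got)} of the {k} required fragments arrived")
    finally:
        # Return without waiting for stragglers (cancel_futures needs Python 3.9+).
        pool.shutdown(wait=False, cancel_futures=True)


def simulated(delay: float, payload: bytes) -> Callable[[], bytes]:
    def fetch() -> bytes:
        time.sleep(delay)
        return payload
    return fetch


fetchers = {0: simulated(0.01, b"A"), 1: simulated(0.02, b"B"),
            2: simulated(0.5, b"C"),  # the slow provider
            3: simulated(0.03, b"P")}
start = time.monotonic()
fragments = fetch_first_k(fetchers, k=3)
elapsed = time.monotonic() - start
```

The download finishes once the three fastest providers respond; the slow one is simply ignored.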

The difference isn’t in what they see. It’s in what attackers, providers, and even the platform operator can’t see.

Why This Belongs in an Executive Security Strategy

For CISOs, DPOs, and CIOs, the question is no longer just “Do we have backups?” or “Is our storage encrypted at rest?” Those are hygiene questions.

The more strategic questions are:

- If a provider is breached, what does the attacker actually get?

- Can any third party ever see full files, even in encrypted form, in one place?

- Is it technically possible for anyone but us to reconstruct and decrypt our most sensitive data?

Client-side encryption combined with cryptographic file splitting and multi-cloud distribution gives you a rare kind of answer: even when (not if) parts of the infrastructure fail or are compromised, your crown jewels remain cryptographically unintelligible to anyone without your keys.

Redundancy keeps the lights on. Encrypt-then-split architecture keeps your secrets safe.

Backups keep you running. Architecture keeps you secure.

TransferChain Drive uses client-side encryption, cryptographic file sharding, and multi-cloud distribution to ensure no single provider ever holds your complete data—or your keys.

No full files.

No central honeypots.

No easy wins for attackers.

Rethink storage. Reduce structural risk. Choose TransferChain Drive.